The Future of Space Planners - Part Three

Showcase and case study of 2023 tech leaping forward in 2024 - Part Three

Click here for part one and here for part two of Query the Realm of 3D...

Diving deeper

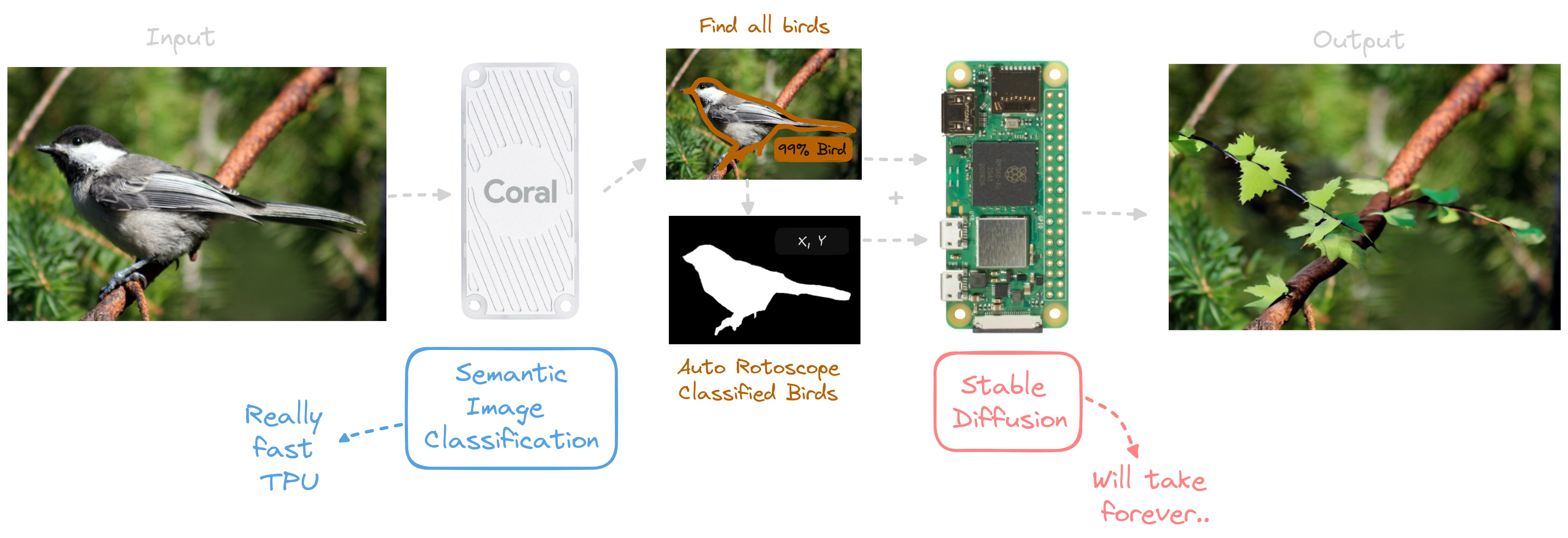

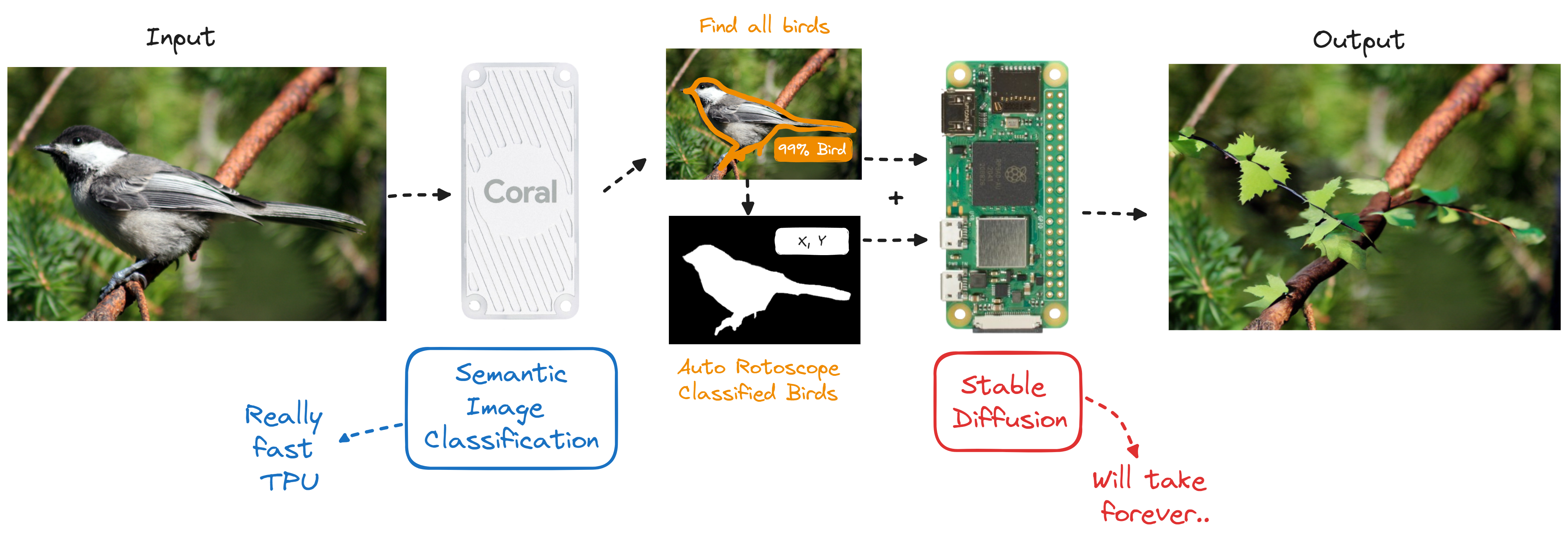

In part two we discovered that we can automate a textbook technique from the animation world called rotoscopy. It allows you to erase targetable regions from (digital) images. We also experimented with Birdy, where I showed you how we can use Coral AI to partially automate the rotoscopy process. Let me get you back up to speed real quick.

On the left: traditional rotoscopy. The film roll is projected onto a drawing canvas, where masks or elements can be drawn on top of the projected image. When done, this results in the animation on the right.

Source: 1917 Rotoscope Patent | Koko The Clown Rotoscope Animation, 1921

Rotoscopy is a very powerful tool, and you should know it also has a darker, more nefarious side: it can be used to manipulate media as a censorship tool. You can effectively remove anything and, in the worst case, make it look like it never happened or never existed, for example by digitally altering video evidence. Used this way, rotoscopy creates masks that hide the original content of the image. It's the visual equivalent of language censorship. I won't be focusing much on this strategic design aspect of rotoscopy within this case study.

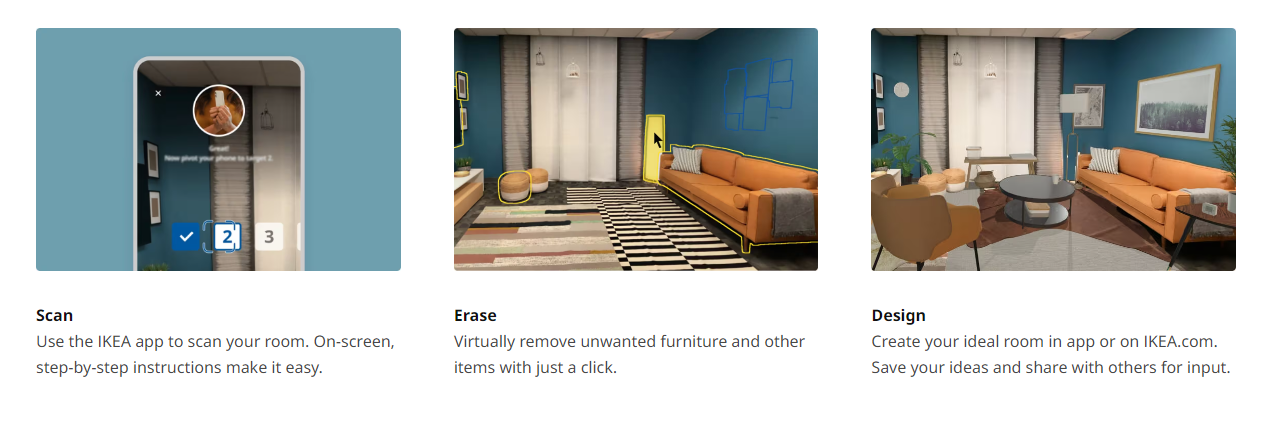

Here's how we can apply rotoscopy to recreate an effect similar to Ikea's Kreativ platform

To recreate the Ikea Kreativ experience on iOS, we need to be able to upload an image of the space, have it auto-rotoscope the existing furniture and then magic fill whatever you want removed.

Source: Nvidia Research

Introducing AI Diffusion InPainting.

Wouldn't it be great if we could somehow combine the rotoscopy and magic fill processes from part two? If we could compress these two labour-intensive steps into one, it would make life so much easier when creating correct rotoscopy masks for our furniture or Birdy. Well, luckily we can! Let's talk about 'inpainting', an extension of Stable Diffusion*.

*Open Source model for Generative Art Design

Inpainting at first sounded to me like new, lame AI marketing slang. Put simply, it automates the entire rotoscopy process: you not only mask the subject but also insert something else via a roto(scopy) mask, a text prompt, or both. I have to admit the name grew on me while writing part three. While exploring how to automate something similar to Ikea's with Birdy, I came to agree that Inpainting is a very suitable name for this particular Stable Diffusion extension.

Here's an example of a 'prompt to image InPainting' service I made that you can try out yourself, right here, right now:

The above example illustrates how you can selectively apply Stable Diffusion to a painted region. On top of that, including prompts gives you more creative leverage.
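To make the 'painted region' idea concrete, here is a toy sketch of the masking step only. It is not how Stable Diffusion works internally, and the tiny grayscale 'images' and the `composite` helper are made up for illustration: generated pixels are kept where the mask is painted, original pixels everywhere else.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255), one inner list per row


def composite(original: Image, generated: Image, mask: Image) -> Image:
    """Take generated pixels where the mask is non-zero, original pixels elsewhere."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Hypothetical 3x2 example: 10 = untouched photo, 99 = model output.
original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]
mask = [[0, 1, 1], [0, 0, 1]]  # 1 = painted region

result = composite(original, generated, mask)
print(result)
```

Only the painted pixels change, which is why a good mask matters so much for the final result.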

Alright, back to furniture

Part of Ikea's Kreativ platform focuses on furniture removal, and since there are no dedicated AI/ML models readily available for just furniture classification, we need to create our own specialised model and train it by supplying it with training data. This entire process looks similar to learning how to drive a car and correctly follow traffic rules. In our case we aren't training humans; instead we train a machine learning model (a digital human) that we slap on a server somewhere on the Internet. Just like with humans, we have to offer it training and then 'judge'* how well or poorly the model solved the problem. We fine-tune and re-adjust, training the model until it knows how to safely and properly operate the 'car'** in an acceptable, predictable and repeatable manner.

*Basically just like the Wax On, Wax Off principle illustrated in The Karate Kid (1984): do this long enough until you're a pro. That applies to machine learning too. This reference is a gross oversimplification and an attempt to nutshell the entire process, but it offers a bird's-eye view of all the necessary steps.

**Replace driving a car with any other task.

However, in Ikea's case and ours we don't want to create Inpaint roto-masks for each image by hand; if you tried my InPaint example, you can say you've been there and done that. To automate this process we can re-use our Coral image classification model from part two. Work smarter, not harder! Right?

Another feature Coral AI offers is the ability to locally re-train trained models. We can use a broad range of existing models online for different use cases without needing expensive hardware. This to me is an absolute win!
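As a rough sketch of what automating the roto-mask could look like: assuming the Coral model hands back one bounding box per detected piece of furniture (an assumption, the exact output depends on the model you use), each box can be painted into a black-and-white mask. The `boxes_to_mask` helper and the pixel values are hypothetical; many inpainting models treat white as 'repaint this', but check the model you actually use.

```python
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in pixels; the max edges are exclusive.
Box = Tuple[int, int, int, int]


def boxes_to_mask(width: int, height: int, boxes: List[Box], padding: int = 0) -> List[List[int]]:
    """Build a mask where 255 marks pixels to repaint and 0 marks pixels to keep."""
    mask = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        # Grow the box a little if requested, then clamp it to the image bounds.
        x0, y0 = max(0, x0 - padding), max(0, y0 - padding)
        x1, y1 = min(width, x1 + padding), min(height, y1 + padding)
        for y in range(y0, y1):
            for x in range(x0, x1):
                mask[y][x] = 255
    return mask


# Hypothetical detection: one object covering columns 1-2 and rows 1-2 of a 6x4 image.
mask = boxes_to_mask(width=6, height=4, boxes=[(1, 1, 3, 3)])
for row in mask:
    print(row)
```

A little `padding` around each box can help hide shadows and edges of the removed object, at the cost of repainting more of the room.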

Bringing it all together:

From left to right you can see each step being performed on a simple Raspberry Pi device enhanced by the Coral AI USB development kit. The on-board Coral Edge TPU coprocessor is capable of performing 4 trillion operations per second (4 TOPS), using only 0.5 watts for each TOPS (2 TOPS per watt). From a power consumption angle, this is ridiculous and terrifying at the same time. Going from smartphones to 'smarterphones' is a potential evolution: portable AI models with minimal on-device energy cost. You could potentially build low-powered, solar, AI-enabled IoT devices.
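To put those numbers in perspective, here is the back-of-the-envelope arithmetic, using only the figures quoted above. It covers the Edge TPU at peak load only, not the Raspberry Pi itself, and the variable names are mine.

```python
PEAK_TOPS = 4.0        # trillion operations per second, as quoted above
WATTS_PER_TOPS = 0.5   # power cost per TOPS, as quoted above

peak_watts = PEAK_TOPS * WATTS_PER_TOPS   # power draw at full load
tops_per_watt = 1.0 / WATTS_PER_TOPS      # efficiency, the "2 TOPS per watt" figure
watt_hours_per_day = peak_watts * 24      # energy if it ran flat out for a full day

print(f"Peak draw: {peak_watts} W")
print(f"Efficiency: {tops_per_watt} TOPS/W")
print(f"Energy per day at full load: {watt_hours_per_day} Wh")
```

Two watts at full tilt is in the range a small solar panel and battery could plausibly cover, which is what makes the solar IoT idea interesting.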

The drawback, however, is that both the Coral USB kit and the RPi perform terribly when running generative models like Stable Diffusion. To compensate for the performance of the RPi, I opted to only run an API webserver on the RPi, connected to Replicate (we opted to work smarter earlier, remember). Replicate.com offers a Stable Diffusion model within their free tier. I simply feed the Coral output directly to Replicate. Replicate then returns the new image within seconds, compared to minutes or hours on my Raspberry Pi.

After the output is returned, you can offer a hover effect in an app or browser, enable selection on a per-object basis and from there simply remove objects. This, in essence, is what is going on behind the scenes of Ikea's furniture removal feature within their Kreativ platform.
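For the curious, handing the job to Replicate could look roughly like the sketch below. It only builds the HTTP request for Replicate's predictions endpoint and does not send it, so it runs offline. The input field names (`image`, `mask`, `prompt`) are assumptions that depend on the model you pick, and the model version, token and URLs are placeholders, not values from my setup.

```python
import json
import urllib.request

REPLICATE_URL = "https://api.replicate.com/v1/predictions"


def build_inpaint_request(token: str, model_version: str, image_url: str,
                          mask_url: str, prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request that starts an inpainting job."""
    payload = {
        "version": model_version,
        # Assumed input names; check the schema of the model you choose.
        "input": {"image": image_url, "mask": mask_url, "prompt": prompt},
    }
    return urllib.request.Request(
        REPLICATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_inpaint_request(
    token="test-token",                           # placeholder
    model_version="<model-version-id>",           # placeholder
    image_url="https://example.com/room.png",     # hypothetical
    mask_url="https://example.com/mask.png",      # hypothetical
    prompt="empty living room, wooden floor",
)
print(request.get_method(), request.full_url)
# urllib.request.urlopen(request) would actually submit the job.
```

Keeping the request building separate from the sending makes it easy to put this behind a small job queue on the RPi.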
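The per-object selection can be sketched as a simple hit test: given a click or hover position, find the detected object under the pointer. This is a minimal sketch under my own assumptions (axis-aligned boxes, and the smallest box wins when objects overlap); the `Detection` type and `pick_object` helper are made up for illustration and are not Ikea's implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    label: str
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive

    def area(self) -> int:
        return (self.x1 - self.x0) * (self.y1 - self.y0)


def pick_object(x: int, y: int, detections: List[Detection]) -> Optional[str]:
    """Return the label of the smallest box containing the point, or None if nothing is hit."""
    hits = [d for d in detections if d.x0 <= x < d.x1 and d.y0 <= y < d.y1]
    if not hits:
        return None
    return min(hits, key=Detection.area).label


# Hypothetical scene: a cushion lying on a sofa.
scene = [Detection("sofa", 0, 0, 100, 60), Detection("cushion", 10, 10, 30, 30)]

print(pick_object(20, 20, scene))    # pointer over the cushion
print(pick_object(80, 50, scene))    # pointer over the sofa only
print(pick_object(200, 200, scene))  # pointer over empty space
```

Preferring the smallest box means a cushion on a sofa stays selectable instead of always being swallowed by the sofa.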

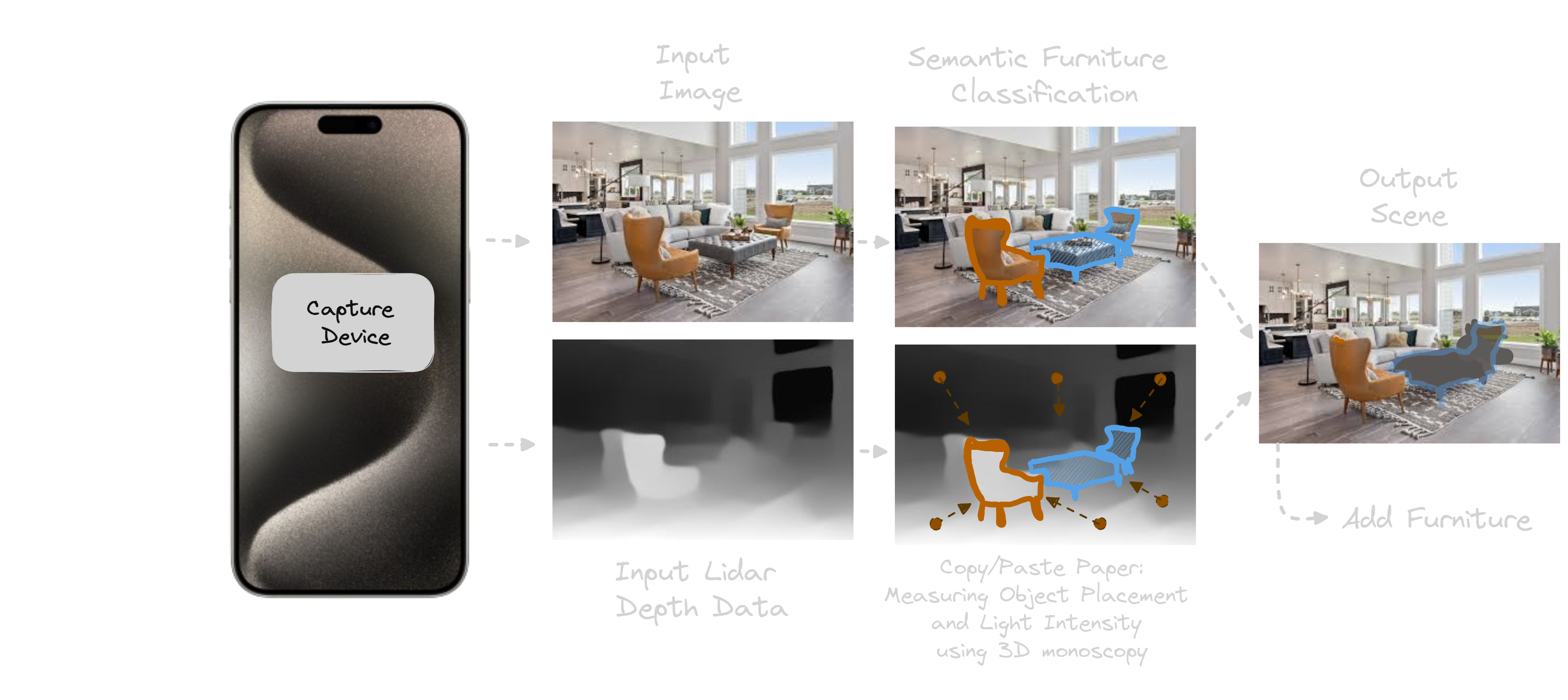

In their app, the yellow lines around furniture represent selectable objects, while blue lines represent old furniture that has been 'diffused' using a model similar to Stable Diffusion. This effectively masks the selected furniture and replaces it with a similar-looking texture that you would naturally expect to see.

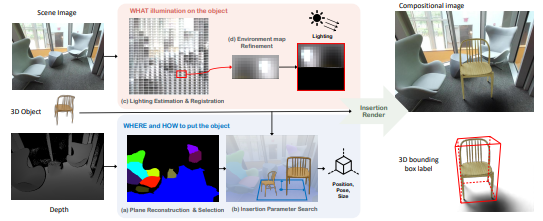

Now let's add the paper from part two into the equation:

Physically Plausible Object Insertion for Monocular 3D Detection

Authors: Yunhao Ge, Hong-Xing Yu, Cheng Zhao, Yuliang Guo, Xinyu Huang, Liu Ren, Laurent Itti, Jiajun Wu

- Stanford University
- University of Southern California
- Bosch Research North America
- Bosch Center for Artificial Intelligence (BCAI)

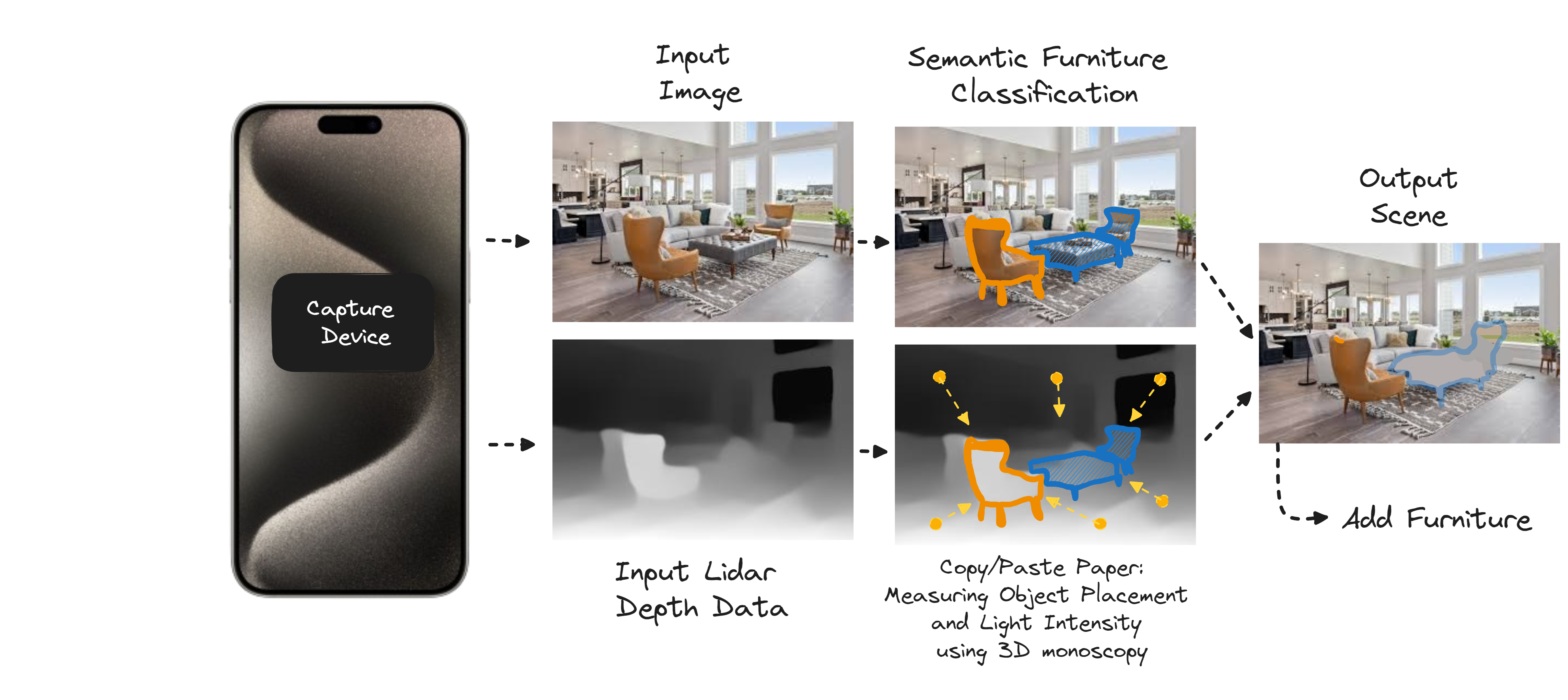

With that, we can essentially come full circle and wrap up the entire workflow:

The beauty of the above solution is that it entirely decouples you from needing a vast 3D publishing catalog API of your existing virtual rooms. You can feed it any 2D image, eliminating massive amounts of data sent over the network and the storage of said 3D content. You are only left with storing your user-generated 3D data and imagery, which you could probably just store on the user's client device (worst case imo, unless you're actually going for cheapskating the entire project). Beyond that, the Ikea app gives the user total creative freedom and control by making smart use of the LiDAR scanner on the iPhone Pro for 3D depth sensing. The blending of ML/AI with classic 3D techniques to create totally new, unique and innovative experiences is very elegant, a display of fine craftsmanship that deserves every bit of praise.

The end of a case study and another major leap forward: the Ikea Kreativ Room Planner.

Closing words:

I personally learned a great deal during the entire journey over the past month, laying out all three parts of the case study and setting up multiple ML models to recreate micro-services similar to the Ikea Kreativ platform. To reiterate from previous parts: the web configurator is relatively straightforward; the iOS counterpart, however, was not. All the limitations I intentionally set in place added challenges that I had to work around by finding and employing clever solutions of my own. It had to be as cost-effective as possible to produce all the functionality over an API in a job-queue style. Basically, it had to be free to develop my own custom AI/ML models locally, with a focus on being fully decentralized and offline. I did not, however, succeed in getting the same output speed as Replicate.com on my Raspberry Pis when firing up Stable Diffusion (this was expected: know your hardware and, more importantly, know how to work with and around it).

Luckily for me, Stable Diffusion is a monster of its own that deserves its own case study. Who knows... maybe in the future. I've got way more exciting things to write about that I am currently developing. We only touched the tip of the tech iceberg. Multimedia specialists never stop growing along with their tools, scavenging, salvaging and repurposing tech or creating an entirely unique Frankenstein contraption.

We use it to actively expand our toolbox in order to tackle even more complex challenges; it's addictive. One by one, these tools are now getting supercharged through AI, for free. You might think it can't get any better... but from personal experience, I didn't think it was possible to get better quality on screen than the PlayStation One... until I experienced the PlayStation Two.

All this to say: I strongly believe that we can now effectively start to eliminate all the communication and media designers and marketeers, the first wave to go down within the creative digital domain. I already see them: AI creative assistants for Art & Technical Directors (just spin up ten of them), taking over by storm and compressing multi-step repetitive tasks into single-button shortcuts, immediately outputting proper sparkly pixels onto a screen near you. This not only improves our production capabilities, it also creates more room to keep the 'creative juices' flowing, because we can spend more time on the final design and the user (journey) experience. We are trained to be digital centipedes; having a dedicated team member to do one particular task is a luxury in my opinion, and if you can't do it yourself, find another job...

It requires broad knowledge to fully dissect all the hidden layers of Ikea's Kreativ platform: it combines Web, AI/ML and the world of 3D. I hope my case study and deep dive into this 'trinity of tech' was insightful to you.

I will be bundling the entire showcase and all its parts into a more accessible format as we go. I might compress it down to a single PDF paper.
