The Future of Space Planners - Part Two

Showcase and case study of 2023 tech leaping forward in 2024 - Part two

Click here to read part one of Query the Realm of 3D...

So, where are we situated?

Source: Latest version of the Replica Space Planner (based on Three.js)

Model by: ida61xq @ Sketchfab

Web side of things

iOS side of things

Let's continue where we left off...

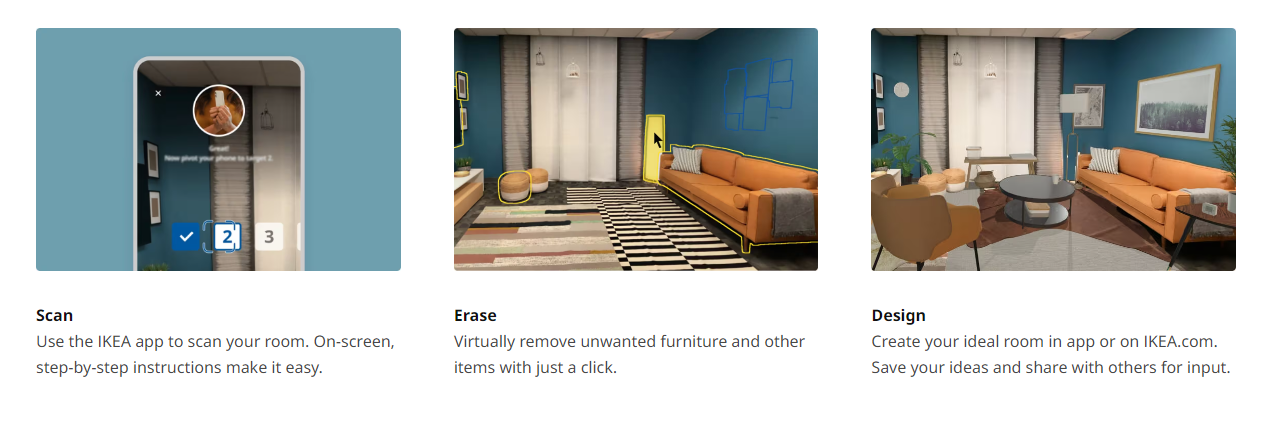

The scan procedure in the iOS app is pretty straightforward. First, it wants you to take an image of your space, sure. Then, as a second step, you simply flip your phone on its side and scan the room by following the easy-to-understand onscreen instructions. Developer note: on the surface, that first photo step looks unnecessary, knowing that the LiDAR functionality on the iPhone already merges texture data with the 3D surface while scanning. Source: Apple API Documentation.

Moving on to the 'Erase' part of the process. In my professional opinion, this is at its core an absolutely fantastic implementation. Just take a picture, wait a bit, come back to the app, and you can select all general 'furniture' in your space and just yeet it out. Yeetus deletus. Gone with the wind. However... it doesn't look great. It works... it just doesn't look great... yet. To put it bluntly, it is the Ugly Betty of magic 'Photoshop' fill tools for patching up dug-out holes. But considering that you will most likely place a new piece of furniture on top of the patch anyway... nobody has to know this dirty secret.
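Hiding the rough patch under a new piece of furniture boils down to standard "over" alpha compositing. Here is a minimal NumPy sketch, assuming the rendered furniture comes with its own alpha mask. This is not necessarily how Ikea's app composites its renders, and `composite_over` is a hypothetical name:

```python
import numpy as np


def composite_over(background: np.ndarray, foreground: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Place a rendered furniture layer on top of the (patched) room photo.

    background, foreground: float arrays of shape (H, W, 3) with values in [0, 1].
    alpha: float array of shape (H, W) in [0, 1]; 1 where the furniture is fully opaque.
    """
    a = alpha[..., np.newaxis]  # broadcast the single alpha channel over R, G and B
    # Where alpha is 1 the furniture covers the smudged fill completely;
    # where it is 0 the original (patched) photo shows through.
    return foreground * a + background * (1.0 - a)
```

Wherever the furniture's alpha is 1, the smudged pixels underneath contribute nothing to the final image, which is exactly why the ugly fill stops mattering once something sits on top of it.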

So before diving into how they do it, let me say upfront that I respect the team behind the technology. It is cleverly integrated and beautifully blended in to give you this magical experience.

I do, however, have a strong feeling that they have some really funky things going on under the hood, so to speak.

There is a chance I could be totally wrong about part one. I recently discovered another route they could have used, one that removes the need for a pre-made 3D scene like their rooms entirely.

It's called:

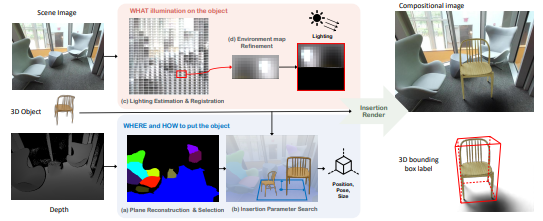

Physically Plausible Object Insertion for Monocular 3D Detection

Authors: Yunhao Ge, Hong-Xing Yu, Cheng Zhao, Yuliang Guo, Xinyu Huang, Liu Ren, Laurent Itti, Jiajun Wu

- Stanford University,

- University of Southern California,

- Bosch Research North America

- Bosch Center for Artificial Intelligence (BCAI)

"In this paper, we explore a novel approach, 3D Copy-Paste, to achieve 3D data augmentation in indoor scenes. We employ physically plausible indoor 3D object insertion to automatically generate large-scale annotated 3D objects with both plausible physical location and illumination."

Read more here

If this is the case, the only challenge that remains is collision handling against foundational objects like the floor, the walls, the ceiling and any windows when moving these photorealistic new 3D assets, which the paper also casually handles. Let's call these models 'RT3D', short for Real-Time 3D Content. Read about Walmart's RT3D announcement here to learn more (what does this mean, in short? Expect that in the near future you can actually buy the in-game toilet seat in GTA 6 through Walmart):
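The paper's actual placement method is more involved. As a rough illustration of what "collision handling against the floor, walls and ceiling" means, here is a simplified axis-aligned bounding box (AABB) check, assuming a box-shaped room measured in metres with y pointing up. `Box`, `drop_to_floor` and `can_place` are hypothetical names for this sketch:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in metres; y is the up axis."""
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float

    def intersects(self, other: Box) -> bool:
        # Two AABBs overlap only if their extents overlap on every axis.
        return (self.min_x < other.max_x and self.max_x > other.min_x
                and self.min_y < other.max_y and self.max_y > other.min_y
                and self.min_z < other.max_z and self.max_z > other.min_z)

    def inside(self, room: Box) -> bool:
        # True if this box lies fully between the room's floor, walls and ceiling.
        return (room.min_x <= self.min_x and self.max_x <= room.max_x
                and room.min_y <= self.min_y and self.max_y <= room.max_y
                and room.min_z <= self.min_z and self.max_z <= room.max_z)


def drop_to_floor(obj: Box, floor_y: float) -> Box:
    # Translate the object vertically so its base rests on the floor plane.
    dy = floor_y - obj.min_y
    return Box(obj.min_x, obj.min_y + dy, obj.min_z, obj.max_x, obj.max_y + dy, obj.max_z)


def can_place(obj: Box, room: Box, obstacles: list[Box]) -> bool:
    # Valid if the object stays inside the room and touches no obstacle (e.g. a window frame).
    return obj.inside(room) and not any(obj.intersects(o) for o in obstacles)
```

Real scenes need oriented or mesh-level collision and non-rectangular rooms, but the idea stays the same: snap the asset to the floor, then reject any pose that pokes through a wall, the ceiling or another object.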

Walmart and Unity To Bring Immersive Commerce to Games, Virtual Worlds and Apps

However, let's continue with what we know: erasing furniture from your space.

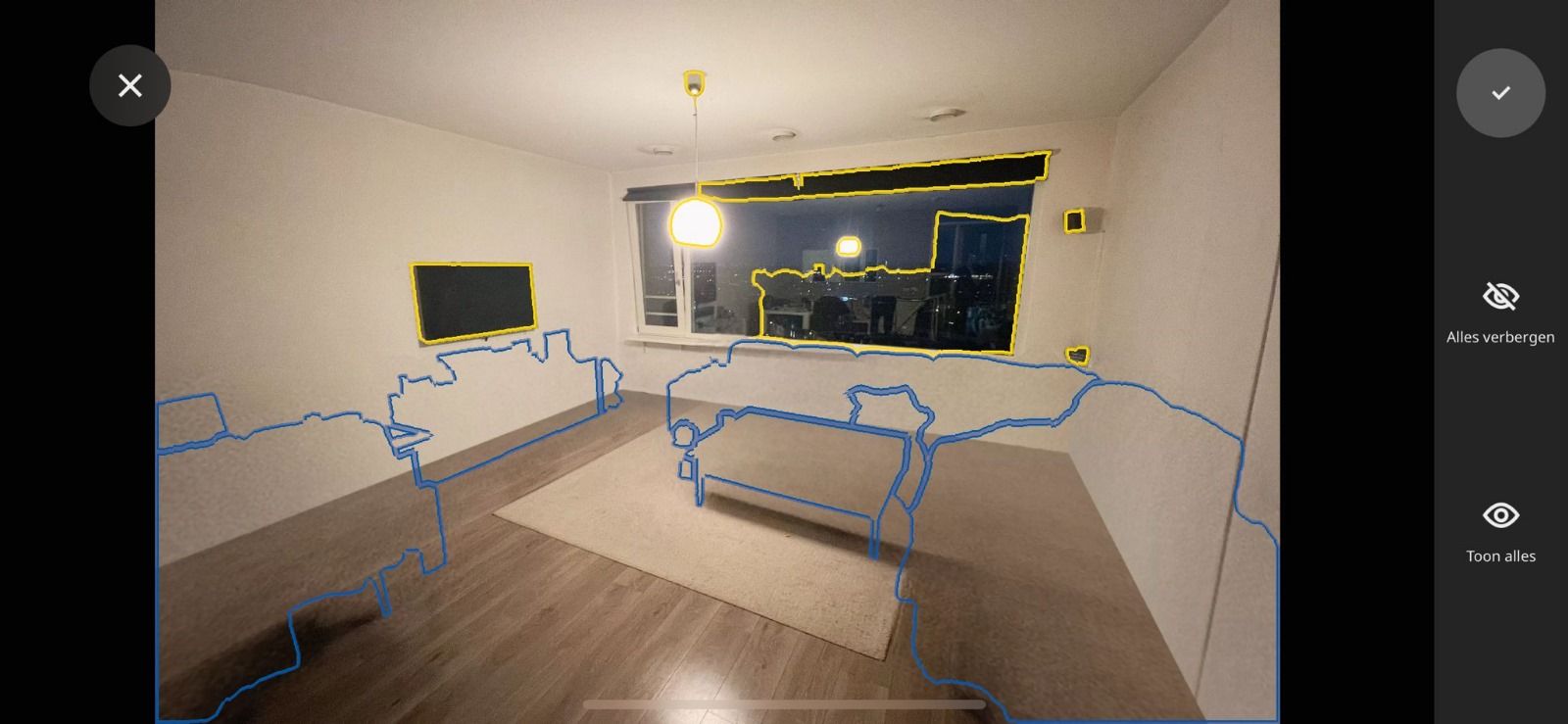

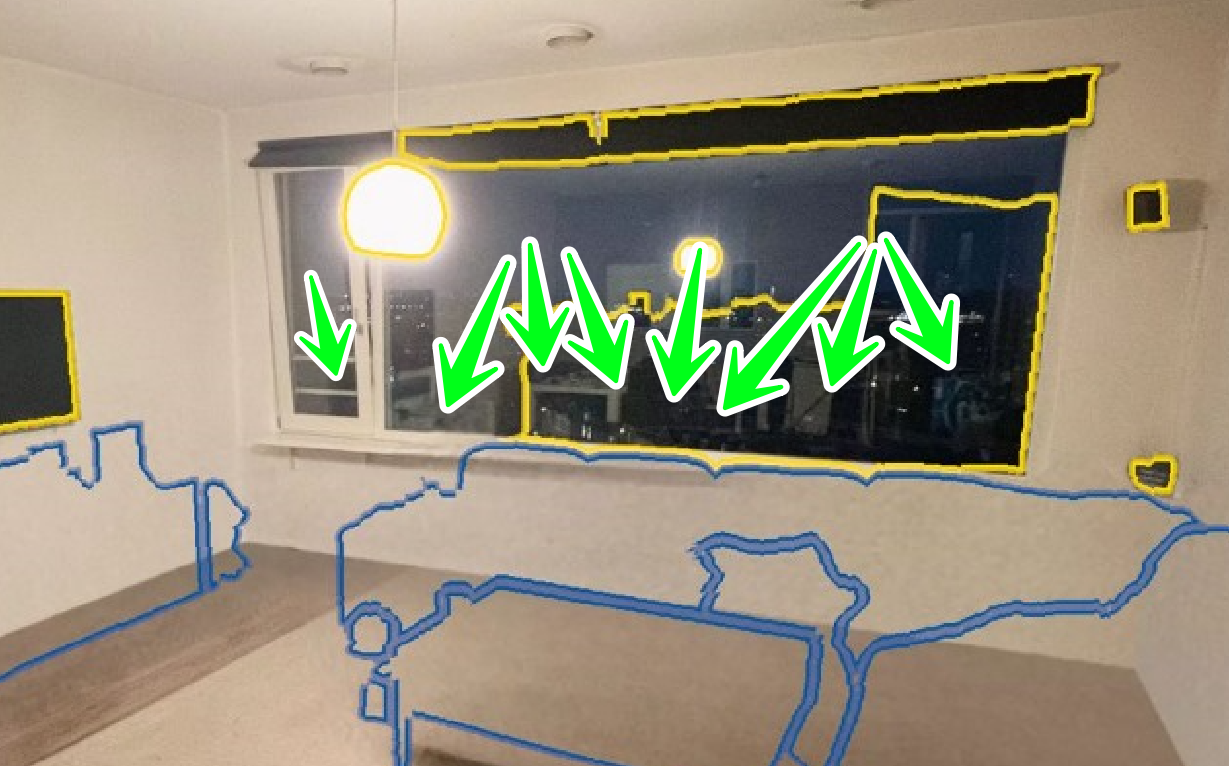

Here is an example of what the Ikea iOS app looks like when erasing furniture and adding new virtual furniture:

Source: Captured on an iPhone

Yeah, that is pretty cool... You can select your existing furniture and delete it like it's no one's business. Sheesh, amazing nonetheless. As you can see, the old furniture is replaced by a smudge that tries to resemble a proper fill as best it can. As mentioned earlier, it sure is ugly. But hold up, it gets worse. Let's take an even closer look. The removed furniture is still visible in the reflection of the living room window, and, obviously, the reverse is true as well: the newly placed 3D furniture does not show up in the window reflection. But hey... come on... are we really that uptight as users? As far as I know Ikea's target audience, this is anything but a problem for Ikea. Personally, I wouldn't sign off on fixing it either, knowing it could eat up more development hours than it's worth.

Moving on to how you could do something similar.

Instead of getting all too technical, let me just show you by introducing you to Coral AI.

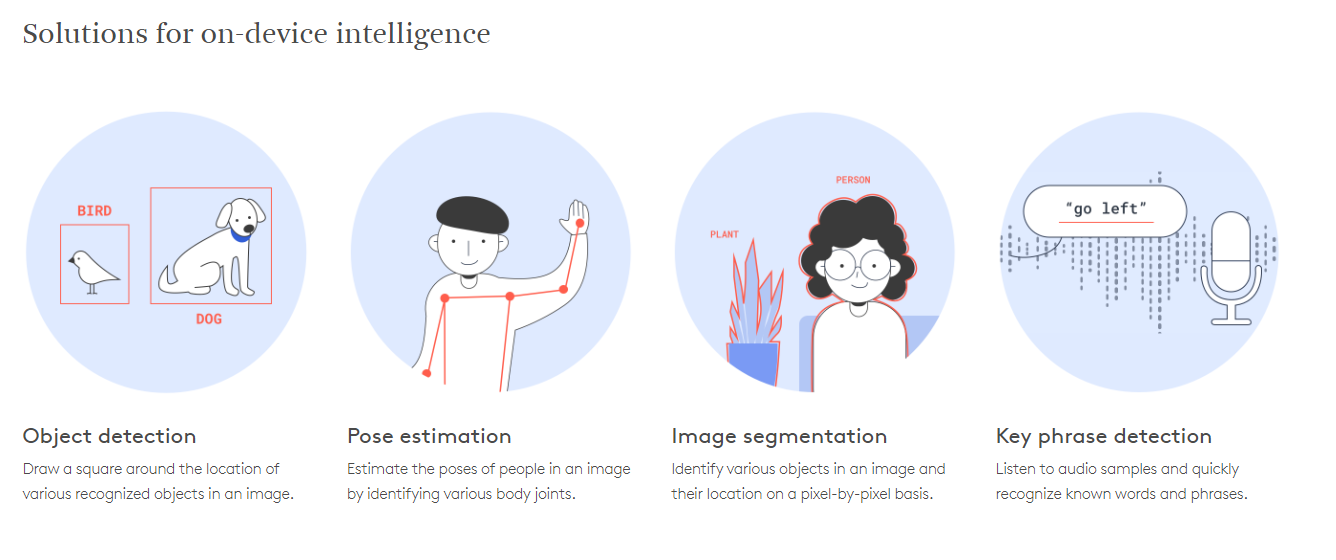

Let's have a quick glance at what Coral AI can do for us:

We are particularly interested in the Image Segmentation model.

The image segmentation looks a darn lot like rotoscoping, don't you agree? It is in fact rotoscoping! One of the oldest tricks in the book! I wish I could somehow let you experience the same sigh of relief I felt, since we are not working with video here... Rotoscoping videos by hand is an absolute nightmare. Most of us in Europe handle 24 FPS film footage; for context's sake, 24 FPS film has 6 fewer frames to rotoscope per second of footage than 30 FPS video. Rotoscoping a one-minute clip means hand-drawing masks for 60 seconds x 24 frames per second, amounting to 1440 frames, and therefore 1440 unique masks, for one minute of footage. Imagine if your subject moves... or worse, if you have multiple objects in the shot: you'll have to go over those 1440 frames twice. In this example, we only have to work with a single still image. You'll understand why this matters the further we go into the subject, so just bear with me a tad longer.
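The frame arithmetic above as a tiny helper. It assumes one hand-drawn mask per object per frame, and the 30 FPS line is only there as the comparison the 6-frame difference refers to:

```python
def masks_to_draw(seconds: int, fps: int, objects: int = 1) -> int:
    """Hand-drawn masks needed to rotoscope a clip: one mask per object per frame."""
    frames = seconds * fps
    return frames * objects


print(masks_to_draw(60, 24))     # 1440: one subject, one minute at 24 FPS
print(masks_to_draw(60, 24, 2))  # 2880: two subjects, so every frame is masked twice
print(masks_to_draw(60, 30))     # 1800: the same minute at 30 FPS
```

A single still image is the degenerate case of one frame, which is why segmenting a photo of your living room is so much cheaper than rotoscoping footage.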

Let's forget about furniture for a second, look around us and focus on birds.

Meet Birdy.

He is our volunteer for today.

As courageous as Birdy is, he ruined the perfect shot of the tree branch...

What can we possibly do about Mister Birdy's transgression if we hand him over to, let's say, Coral?

Clicking the button in the example above illustrates fully automated rotoscoping, as discussed in part one.

Not only is it able to distinguish animals from the background, it can extract their location within the image too. Goodbye, laborious and intensive manual rotoscoping!
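Getting "the location within the image" out of a segmentation result is straightforward once you have a per-pixel mask. A minimal NumPy sketch, assuming the mask is a 2-D boolean array where True marks Birdy's pixels (how Coral's own examples hand back their results may differ; both function names are hypothetical):

```python
from typing import Optional, Tuple

import numpy as np


def mask_bounding_box(mask: np.ndarray) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of the True pixels in a 2-D mask, or None if empty."""
    ys, xs = np.nonzero(mask)  # row (y) and column (x) indices of every masked pixel
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def mask_centroid(mask: np.ndarray) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centre of mass of the masked pixels, or None if empty."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())
```

The bounding box is handy for cropping or for placing a replacement object, while the mask itself is what later tells the fill step exactly which pixels to regenerate.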

In part three of this case study we will go over the magic generative fill functionality. This process fills the void left behind now that Birdy has successfully been eliminated from the base image, using generative adversarial AI models on top of these processed results.

Apply the above tool to the furniture in your space and you get the desired effect, just as Ikea allows you to erase furniture in their wonderful app.

Image segmentation models are excellent at classifying objects on a per-pixel basis. In the next part we will be retraining the AI segmentation model into one that is trained to classify furniture! We will have to prepare the training data first too, yeah... that too.
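"Classifying on a per-pixel basis" typically means the model outputs a score for every class at every pixel, and the highest score wins. A minimal sketch of turning such scores into an "erase the furniture" mask, assuming an (H, W, C) score array; the class IDs in `FURNITURE_CLASSES` are hypothetical and would come from the label map of whichever model you (re)train:

```python
import numpy as np

# Hypothetical class IDs for a furniture-trained model (e.g. 3 = sofa, 5 = table).
FURNITURE_CLASSES = {3, 5}


def label_map(scores: np.ndarray) -> np.ndarray:
    """Collapse per-pixel class scores of shape (H, W, C) into an (H, W) map of class IDs."""
    return np.argmax(scores, axis=-1)


def erase_mask(labels: np.ndarray, classes) -> np.ndarray:
    """Boolean (H, W) mask that is True wherever a pixel belongs to one of the given classes."""
    return np.isin(labels, list(classes))
```

Selecting a single sofa in the app would just mean passing a smaller set of class IDs (or one connected region of the mask) instead of every furniture class at once.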

Click here for more information about Image Segmentation Models

Here's what the 'Generative Fill' tool looks like in Adobe Photoshop, just for reference's sake.

Source: Link

We obviously won't be using Photoshop, but we will fill and seamlessly blend that empty space with new pixels by calling GAN AI models over the Internet. Again, in my opinion, the way Ikea's native mobile applications offer the customer total freedom in redesigning existing spaces is a very creative solution that deserves praise.
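Before the AI models come into play, it helps to see the basic idea of "filling the void from its surroundings" in its simplest form. The sketch below is a classical, non-AI baseline (diffusion inpainting: each hole pixel is repeatedly replaced by the average of its four neighbours). It is explicitly not the GAN approach planned for part three, nor what Ikea uses; it blurs the surroundings into the hole instead of inventing new detail. `diffusion_fill` is a hypothetical name:

```python
import numpy as np


def diffusion_fill(image: np.ndarray, mask: np.ndarray, iterations: int = 500) -> np.ndarray:
    """Fill masked pixels by repeatedly averaging their 4 neighbours (simple diffusion inpainting).

    image: float array of shape (H, W) or (H, W, C).
    mask:  bool array of shape (H, W); True marks the hole left by the erased object.
    Known (unmasked) pixels are never modified.
    """
    out = image.astype(float).copy()
    hole = mask.astype(bool)
    # Pad only the two spatial axes, repeating edge values so border pixels have 4 neighbours.
    pad_width = ((1, 1), (1, 1)) + ((0, 0),) * (out.ndim - 2)
    for _ in range(iterations):
        padded = np.pad(out, pad_width, mode="edge")
        neighbours = (padded[:-2, 1:-1] + padded[2:, 1:-1]      # up + down
                      + padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0  # left + right
        out[hole] = neighbours[hole]  # update only the hole; the boundary stays fixed
    return out
```

On a smooth wall this already looks passable; on textured surfaces like wood floors or curtains it produces exactly the kind of smudge seen in the app, which is where generative models earn their keep.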

What's next? Part three!

We've reached the end of part two. You'll have to strap in and wait for part three!

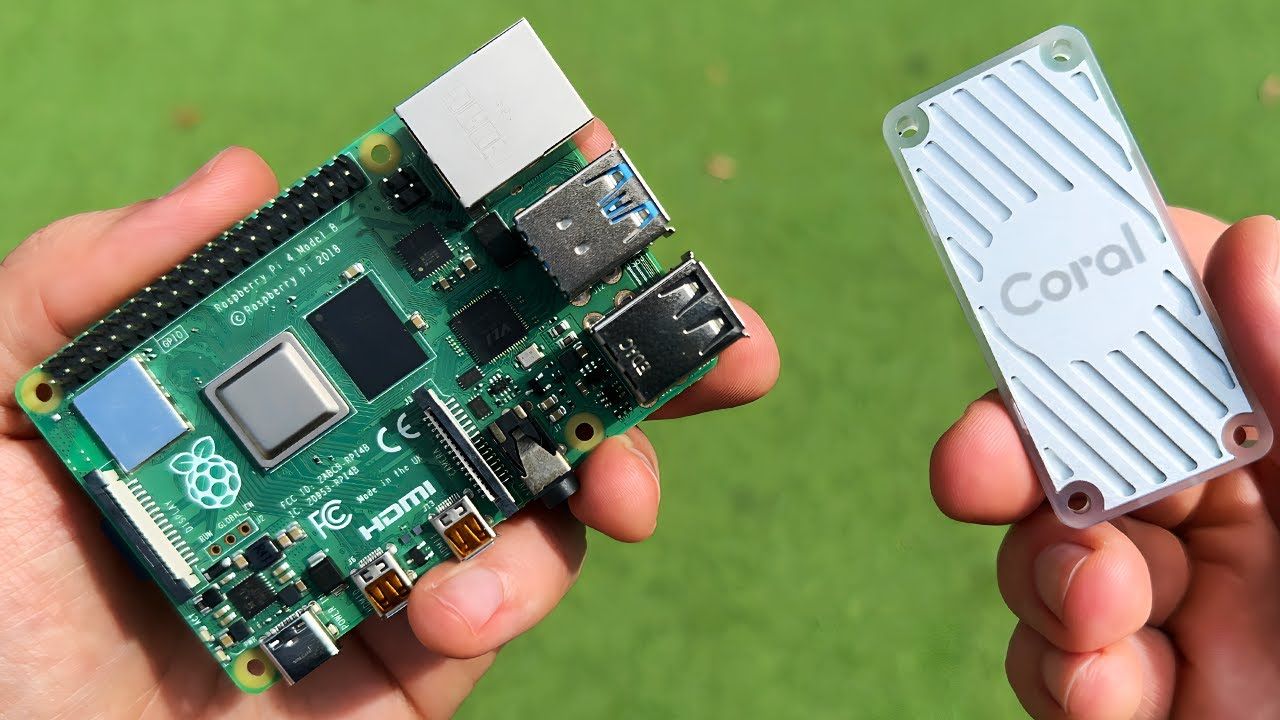

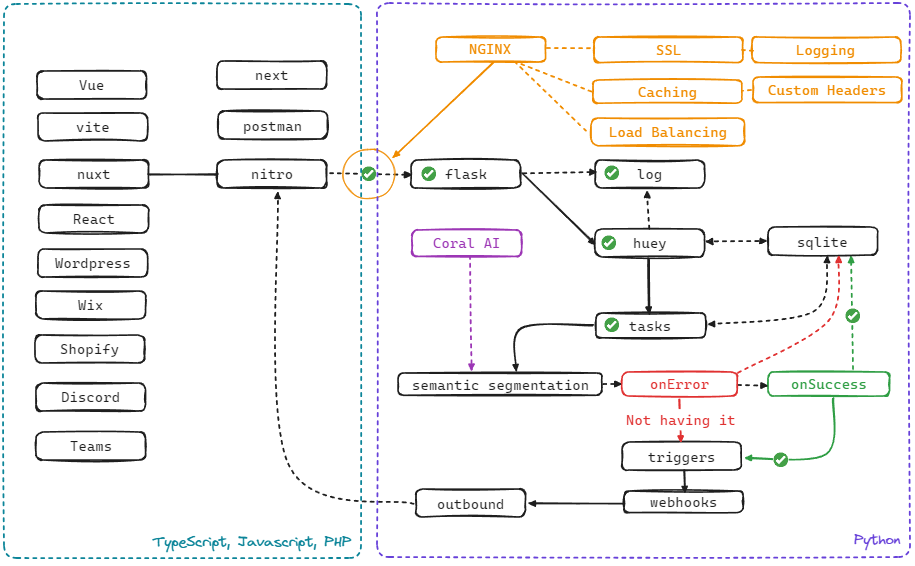

Bonus: Going into more technical details of my Coral implementation.

For rapid prototyping purposes I assigned one of my Raspberry Pis, compiled the Coral AI Accelerator code and coded a basic job queue microservice against it using Flask, for local AI development and ML (re-)training. These are basically the first steps toward exposing the segmentation service to the internet so it can receive new segmentation jobs from external API services or platforms.

Flowchart of my basic Flask and Job Queue Microservice utilizing Coral.
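To give an idea of the job queue part of that flowchart, here is a minimal standard-library sketch. It is an illustration, not the exact code behind the flowchart: `JobQueue` and its methods are hypothetical names, and `handler` stands in for whatever function actually runs segmentation on the Coral accelerator:

```python
import queue
import threading
import uuid
from typing import Any, Callable, Dict


class JobQueue:
    """Minimal in-process job queue: submit() returns a job ID, one worker thread processes jobs in order."""

    def __init__(self, handler: Callable[[Any], Any]) -> None:
        self._handler = handler  # e.g. a function that runs segmentation on an image
        self._jobs: "queue.Queue[str]" = queue.Queue()
        self._payloads: Dict[str, Any] = {}
        self._status: Dict[str, Dict[str, Any]] = {}
        self._lock = threading.Lock()
        # A single worker means only one job touches the accelerator at a time.
        threading.Thread(target=self._worker, daemon=True).start()

    def submit(self, payload: Any) -> str:
        job_id = uuid.uuid4().hex
        with self._lock:
            self._payloads[job_id] = payload
            self._status[job_id] = {"state": "queued", "result": None}
        self._jobs.put(job_id)
        return job_id

    def status(self, job_id: str) -> Dict[str, Any]:
        with self._lock:
            return dict(self._status.get(job_id, {"state": "unknown", "result": None}))

    def join(self) -> None:
        """Block until every submitted job has been processed (handy for tests)."""
        self._jobs.join()

    def _worker(self) -> None:
        while True:
            job_id = self._jobs.get()
            with self._lock:
                payload = self._payloads.pop(job_id)
                self._status[job_id]["state"] = "running"
            try:
                result, state = self._handler(payload), "done"
            except Exception as exc:  # record the failure instead of killing the worker
                result, state = str(exc), "failed"
            with self._lock:
                self._status[job_id] = {"state": state, "result": result}
            self._jobs.task_done()
```

In a Flask app, a POST route would call `submit()` and return the job ID, and a GET route would return `status(job_id)` as JSON, so external services can poll for their segmentation results instead of holding a request open.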


It works amazingly well locally for developing cost-effective proofs of concept, tech demos and even production-ready solutions. Thinking of all the possibilities, I predict 2024 will be a year where AI gets slapped and labeled onto every single product our eyes can see. Half of it will be marketed as AI, while the other half is just a repackaged, retrained or rebranded multi-purpose ML model; who's gonna tell you the difference nowadays anyway? We're all heading straight for a bump in the road in terms of AI digital services, with AI SaaS platforms popping into existence by the hour. It's yet another (arms) race on the digital frontier toward a one-stop shop: a centralized hub housing multiple multi-role models working together in perfect unison (at best), like a clockwork machine that could do everything for you as a digital jack-of-all-trades ninja assistant.

When I need beefier solutions, I would personally opt for platforms like Hugging Face, Replicate and Runway. The direct issue, however, is the cost of running on their infrastructure, plus the network traffic cost on top of that just for talking to and sending data to the AI model. This deters the average Joe developer.

Excited and can't wait for even more insight?

Get in touch by joining my Discord community called 'The Foundry' and let's experiment together.

